Hello! I recently released an app I’ve been working on, Memos AI, and I wanted to talk a bit about how I built it. Before we dive in, let’s cover what Memos AI actually is: a voice memos app that uses artificial intelligence to automatically take notes for you during lectures, meetings, and more. Memos AI will:

- Transcribe speech

- Summarize that transcript

- Take notes based on that transcript

- and more!

Memos AI was built with the PWA Starter, which is PWABuilder’s starter template for building new Progressive Web Apps. If you want to get started yourself, take a look at the PWA Starter Quick Start.

If you want to try the app out for yourself before reading, head to Memos AI to use the app live.

Now, let’s dive into how Memos AI makes use of AI on the web!

AI on the Web

When I started building Memos AI, I evaluated some of the existing AI solutions available for web apps, and I was surprised to find multiple open-source options for running AI inference client-side! Combined with cloud services such as the OpenAI API or Azure for more compute-heavy tasks, these can provide high-quality AI experiences in a PWA.

For Memos AI, the main AI feature is speech-to-text. I wanted to take a “cloud by default” approach, which gives the best performance across all devices, while still giving users the option to run the AI features client-side, on their own device. Defaulting to the cloud ensures that users on mobile devices, or on less powerful devices in general, are not left out of the experience.

Live Speech-To-Text

In the Cloud

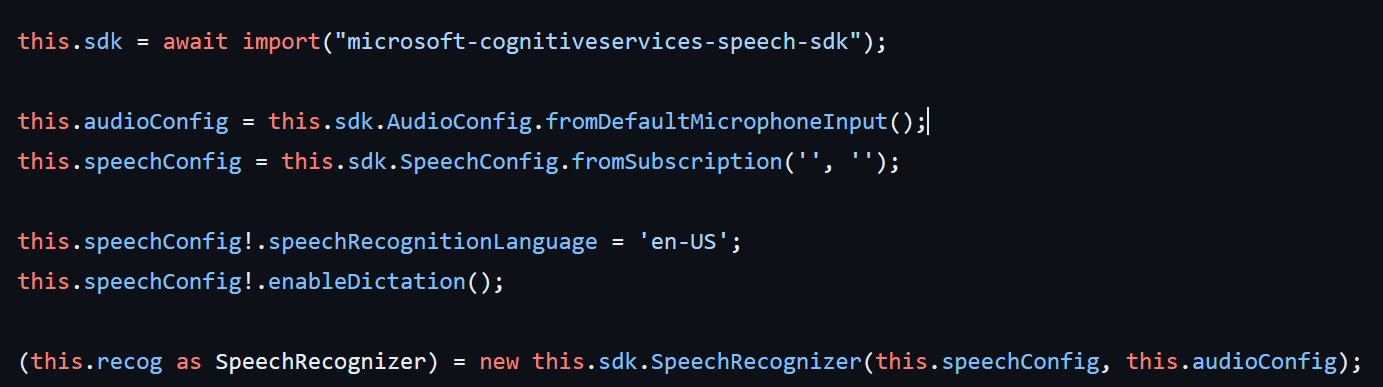

For the live speech-to-text transcript, I am using the Azure Speech SDK, which supports JavaScript both in the browser and in Node. In the browser, the SDK captures audio from the microphone on the user’s device and streams it to the Azure Speech service, which returns the transcript live. This made it incredibly easy to implement in my app with just some JavaScript:

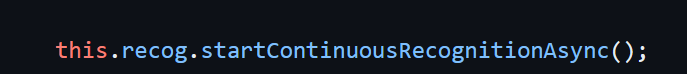

This code sets up a SpeechRecognizer, which I can then use to run live speech-to-text by calling its startContinuousRecognitionAsync method.

This works perfectly in my web-components-based app, since it’s just standard JavaScript that would work in any framework.
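For readers who want the setup as text, here is a minimal sketch of a similar live-transcription setup, assuming the `microsoft-cognitiveservices-speech-sdk` npm package. It is not the app’s actual code: `startLiveTranscription`, `onPartial`, and `onFinal` are hypothetical names, and the SDK module is passed in as a parameter so the function doesn’t depend on how the SDK is loaded. Shipping a subscription key to the browser exposes it, so a production app would more likely fetch a short-lived token from its own server and use `SpeechConfig.fromAuthorizationToken` instead.

```javascript
// Sketch: live speech-to-text with the Azure Speech SDK
// ("microsoft-cognitiveservices-speech-sdk"). Audio from the user's microphone
// is streamed to the Azure Speech service, which returns text as the user speaks.
function startLiveTranscription(sdk, { key, region, language = "en-US" }, onPartial, onFinal) {
  // Placeholder credentials; a production app would prefer a short-lived token.
  const speechConfig = sdk.SpeechConfig.fromSubscription(key, region);
  speechConfig.speechRecognitionLanguage = language;

  // Capture audio from the default microphone (the browser may prompt for permission).
  const audioConfig = sdk.AudioConfig.fromDefaultMicrophoneInput();
  const recognizer = new sdk.SpeechRecognizer(speechConfig, audioConfig);

  // Interim hypotheses, fired repeatedly while a phrase is still being spoken.
  recognizer.recognizing = (_sender, event) => onPartial(event.result.text);

  // Final result for each completed phrase; NoMatch and other reasons are skipped.
  recognizer.recognized = (_sender, event) => {
    if (event.result.reason === sdk.ResultReason.RecognizedSpeech) {
      onFinal(event.result.text);
    }
  };

  // Keep recognizing until stopped, instead of returning after one utterance.
  recognizer.startContinuousRecognitionAsync(
    () => {},
    (err) => console.error("Could not start recognition:", err),
  );

  return {
    // Stop recognition, then release the microphone and the service connection.
    stop: () =>
      new Promise((resolve, reject) => {
        recognizer.stopContinuousRecognitionAsync(() => {
          recognizer.close();
          resolve();
        }, reject);
      }),
  };
}
```

In a browser app, `sdk` would come from `import * as sdk from "microsoft-cognitiveservices-speech-sdk"`, and the returned `stop()` would be called when the user ends the memo.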

Client-Side

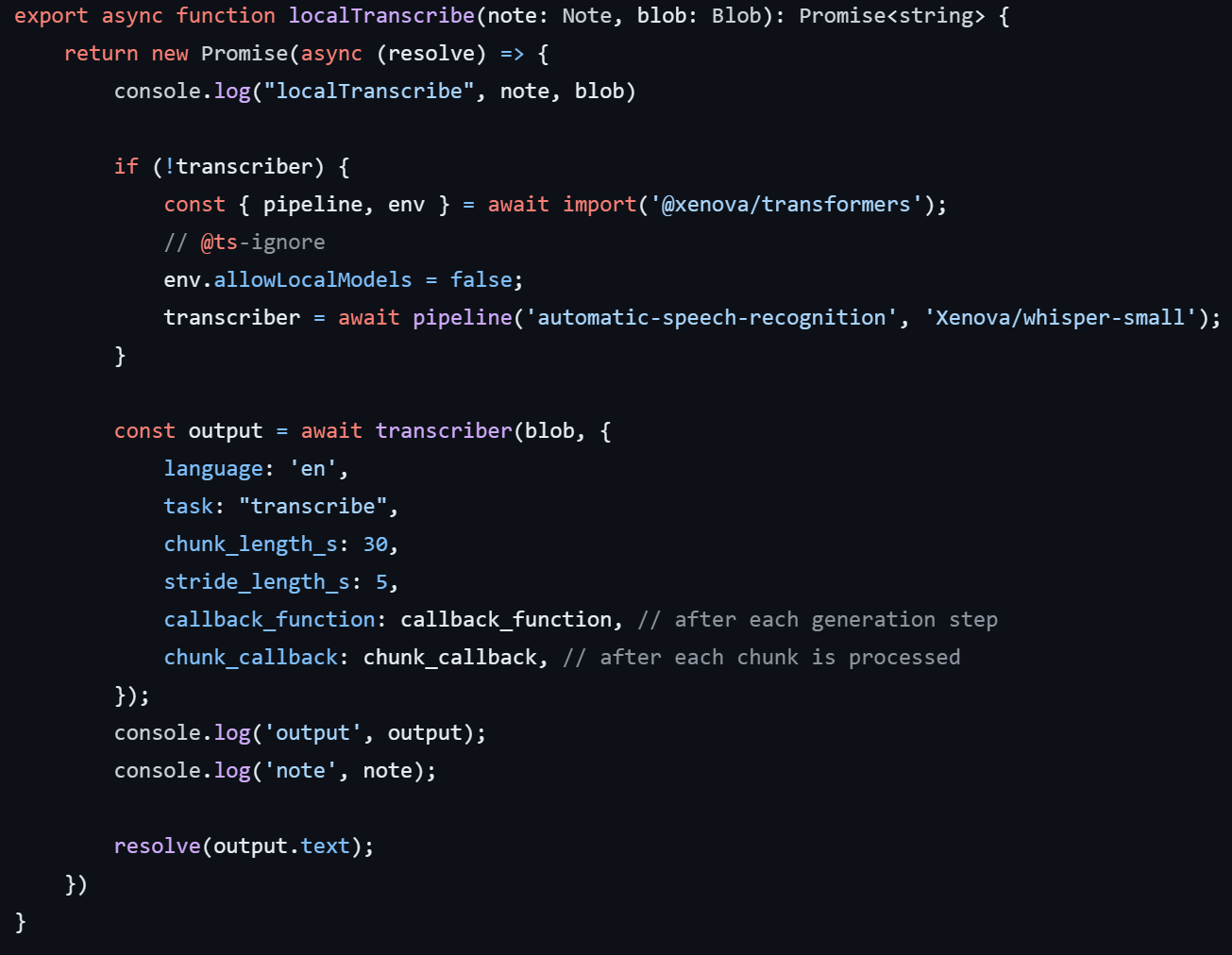

As mentioned above, I found existing solutions for running AI inference client-side in a web app, including Transformers.js, which lets you run AI models from Hugging Face client-side, right in your browser!

Although it is possible, I did not set this up to do live speech-to-text. Instead, it runs on a recorded blob of the note, which I generate with the MediaRecorder API. I pass this blob to a Web Worker (so that transcription doesn’t block the UI), and the worker then does local speech-to-text with Transformers.js.
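For the recording step, a minimal sketch of collecting MediaRecorder chunks into a single Blob might look like the following. `startRecording` is a hypothetical helper, and the recorder constructor is a parameter (defaulting to the browser’s `MediaRecorder`) only so the logic can be exercised outside a browser.

```javascript
// Sketch: record a MediaStream into one Blob with the MediaRecorder API.
// `Recorder` defaults to the browser's MediaRecorder; it is a parameter only so
// this hypothetical helper can be exercised outside a browser.
function startRecording(stream, Recorder = globalThis.MediaRecorder) {
  const recorder = new Recorder(stream);
  const chunks = [];

  // The recorder delivers encoded audio in chunks; skip empty ones.
  recorder.ondataavailable = (event) => {
    if (event.data && event.data.size > 0) chunks.push(event.data);
  };

  // Resolves with the full recording once the recorder has stopped.
  const finished = new Promise((resolve, reject) => {
    recorder.onstop = () => resolve(new Blob(chunks, { type: recorder.mimeType }));
    recorder.onerror = (event) => reject(event);
  });

  recorder.start();
  return {
    stop() {
      recorder.stop();
      return finished;
    },
  };
}
```

In the browser, the stream would come from `navigator.mediaDevices.getUserMedia({ audio: true })`; keep the returned object, call `stop()` when the user ends the note, and stop the stream’s tracks afterwards to release the microphone.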

Web workers are a built-in way to run code on a thread separate from the main UI thread. Running the transcription in a worker keeps the UI responsive while the heavy work happens in the background.
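As a rough illustration of the worker side, here is a sketch of a Transformers.js transcription worker. It assumes the `@huggingface/transformers` package (published as `@xenova/transformers` before v3) and the `Xenova/whisper-tiny.en` model; both are my choices for the example, not necessarily what Memos AI uses. The Whisper pipeline expects 16 kHz mono samples as a `Float32Array`, and because `AudioContext` isn’t available inside workers, this sketch assumes the main thread decodes the recorded blob first (shown in the trailing comments). The pipeline loader is injected, and `createTranscriptionHandler` and the `{ id, audio }` message shape are hypothetical.

```javascript
// Sketch of a Web Worker that transcribes audio with Transformers.js.
// `loadTranscriber` returns a promise of an ASR pipeline; it is injected so the
// handler logic can run without downloading a model (see wiring below).
function createTranscriptionHandler(loadTranscriber, post) {
  let transcriberPromise = null;

  return async function onMessage(event) {
    // Assumed message shape: { id, audio }, audio being 16 kHz mono Float32Array.
    const { id, audio } = event.data;
    try {
      if (!transcriberPromise) {
        // Load the model once and reuse it; forget a failed load so it can be retried.
        transcriberPromise = loadTranscriber().catch((err) => {
          transcriberPromise = null;
          throw err;
        });
      }
      const transcriber = await transcriberPromise;
      // Whisper reads 30 s windows; overlapping chunks handle longer notes.
      const output = await transcriber(audio, { chunk_length_s: 30, stride_length_s: 5 });
      post({ id, text: output.text });
    } catch (err) {
      post({ id, error: String(err) });
    }
  };
}

// Wiring inside worker.js (created with new Worker(url, { type: "module" })):
//   import { pipeline } from "@huggingface/transformers";
//   self.onmessage = createTranscriptionHandler(
//     () => pipeline("automatic-speech-recognition", "Xenova/whisper-tiny.en"),
//     (msg) => self.postMessage(msg),
//   );
//
// Main thread: decode the blob at 16 kHz and send the first channel to the worker.
//   const ctx = new AudioContext({ sampleRate: 16000 });
//   const decoded = await ctx.decodeAudioData(await blob.arrayBuffer());
//   worker.postMessage({ id: 1, audio: decoded.getChannelData(0) });
```

Caching the pipeline promise means the model is only downloaded and initialized on the first note; later notes reuse it.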

And that is how I implemented AI in Memos AI!

For the rest of the app, I used our PWA Starter, with Fluent Web Components for the UI. For animations between pages, I am using the View Transitions API, which gives native, browser-built-in animated page transitions.
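For page changes inside a single-page app, the same-document form of the API is `document.startViewTransition(callback)`: the browser captures the current view, runs the callback that updates the DOM, and animates between the two (a cross-fade by default). A minimal sketch with a fallback for browsers without support is below; `navigateWithTransition` and `renderPage` are hypothetical names, and `doc` is a parameter only so the sketch can be tested outside a browser.

```javascript
// Sketch: update the page inside a view transition when the browser supports it.
// `renderPage` is a hypothetical callback that swaps the DOM for the new page.
function navigateWithTransition(renderPage, doc = globalThis.document) {
  if (!doc || typeof doc.startViewTransition !== "function") {
    // No support: update the DOM immediately, without animation.
    return Promise.resolve(renderPage());
  }
  // The browser snapshots the old view, runs renderPage, then animates
  // from the old snapshot to the new DOM (a cross-fade by default).
  const transition = doc.startViewTransition(() => renderPage());
  // Resolves once the animation has finished.
  return transition.finished;
}
```

A router could then call `navigateWithTransition(() => showPage("/notes"))`, where `showPage` stands in for whatever swaps in the page component. Feature-detecting `startViewTransition` keeps navigation working in browsers that don’t support the API yet.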

If you want to learn more about view transitions, check out our latest post on the View Transitions API.

Thanks!